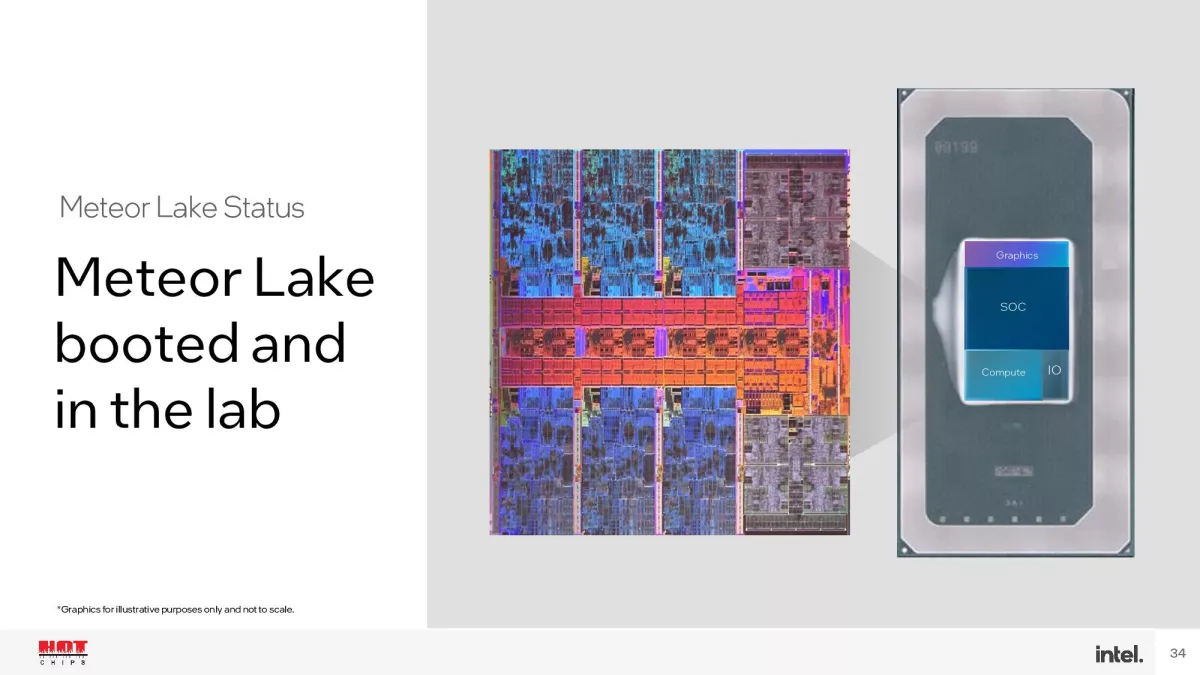

The Meteor Lake processors, due for release by the end of the year, will be the first Intel processors to use a hybrid design that combines tiles manufactured by both Intel and TSMC. They will arrive first in laptops, where Intel is focusing on energy efficiency and strong performance in locally run AI workloads, and will reach desktop computers shortly thereafter.

Apple and AMD already offer processors with built-in AI accelerators, while Microsoft is busy optimizing Windows to take advantage of the many custom AI accelerator engines.

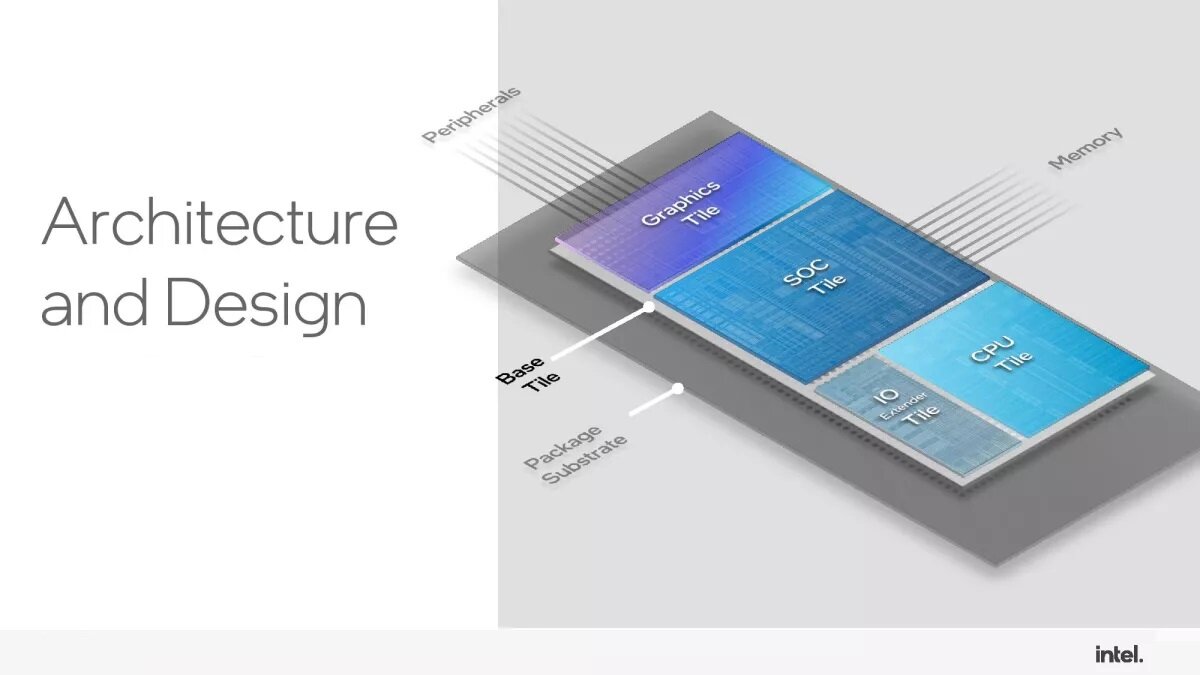

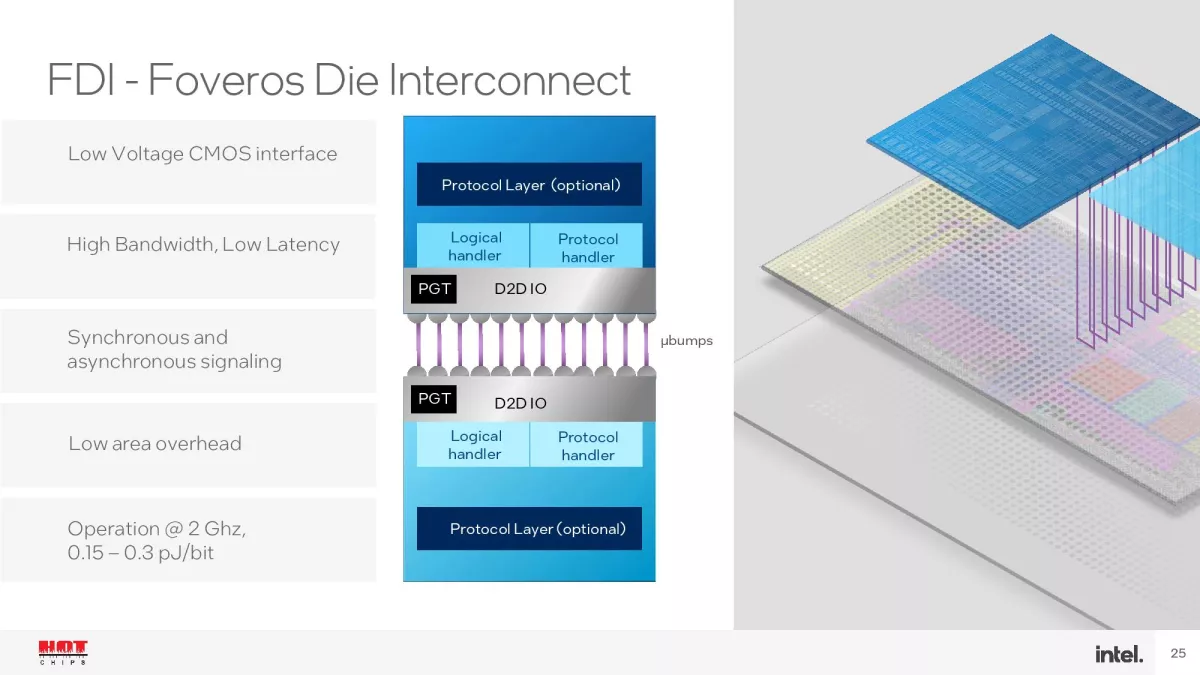

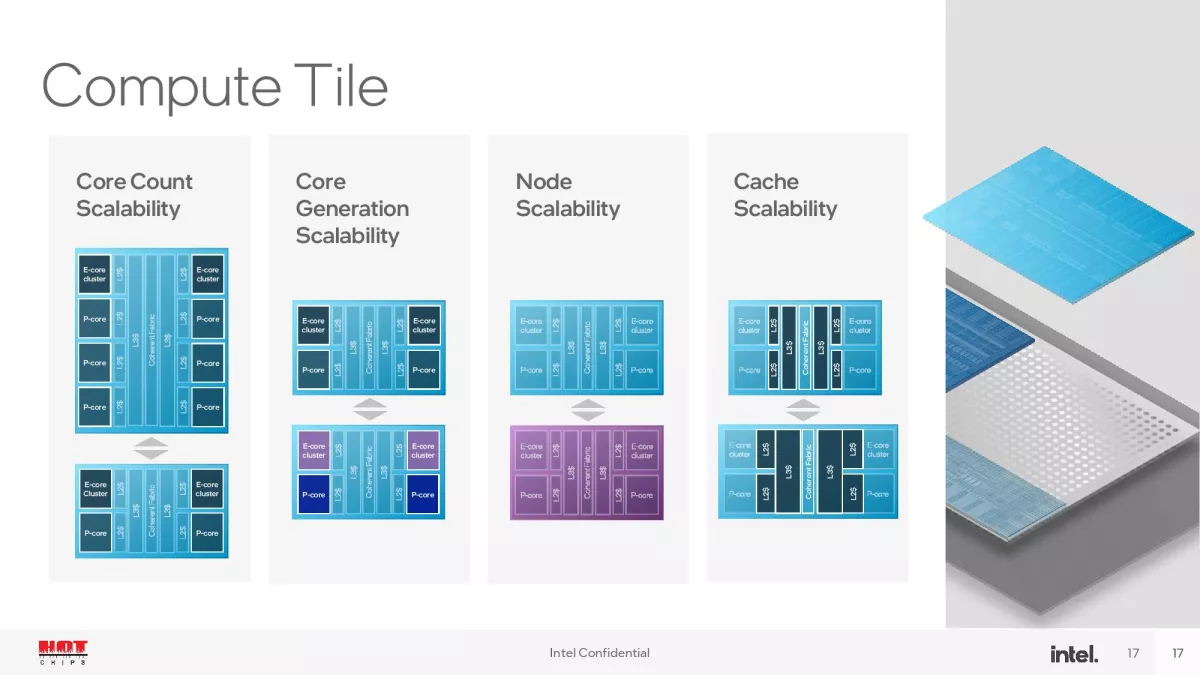

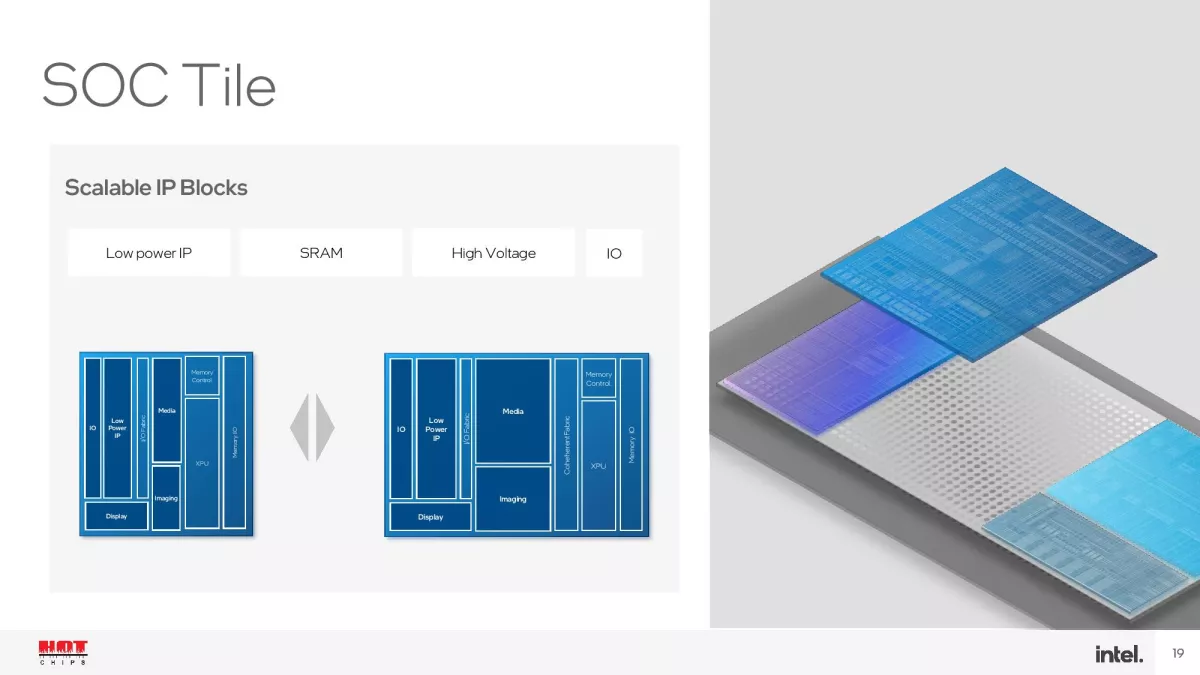

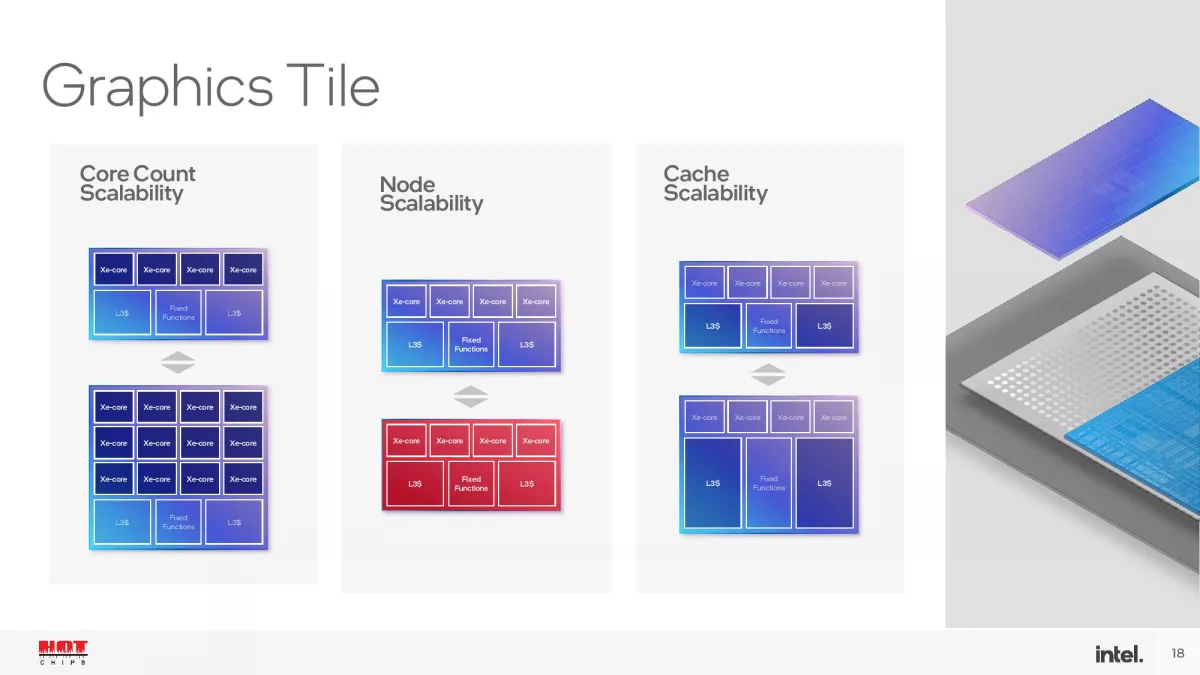

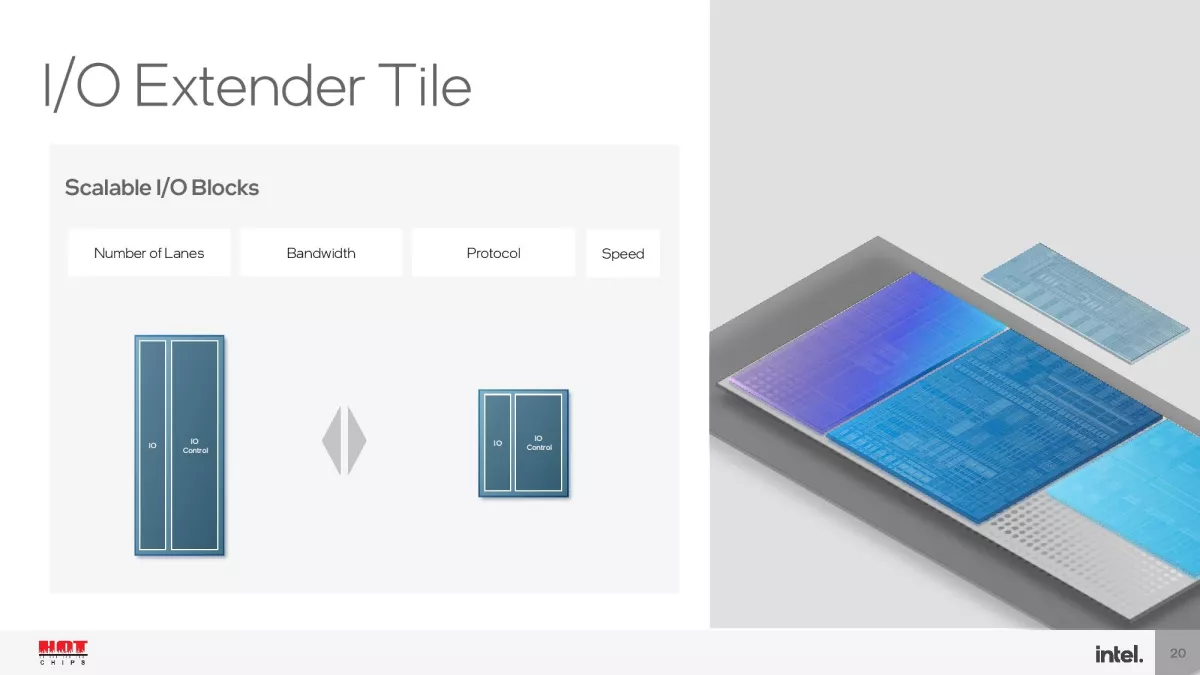

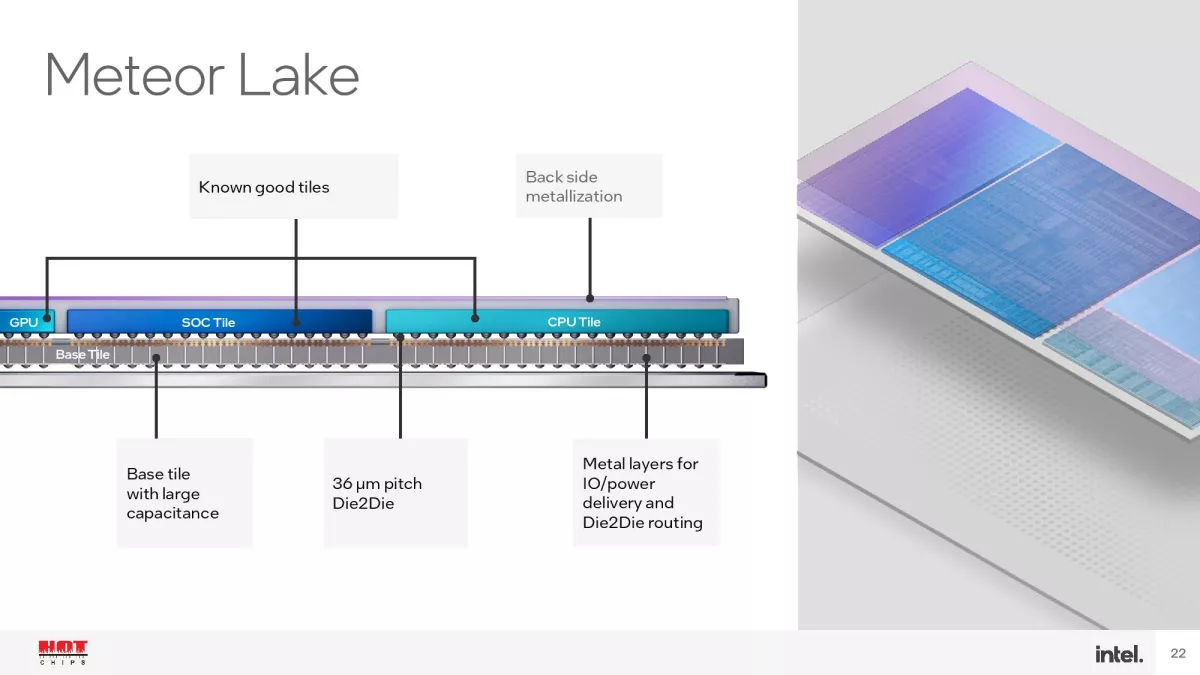

Codenamed Meteor Lake, the 14th generation Intel Core processors will be based on a chiplet design, built on the Intel 4 process together with TSMC’s N5 and N6 processes. Using Intel’s Foveros die-interconnect technology, these processors will combine four tiles (CPU, GPU, SoC/VPU, and I/O) linked through an interconnect. The SoC/VPU tile is not only about accelerating AI applications: it also contains hardware for other functions, such as the I/O extender, the memory controller, the GNA (Gaussian & Neural Accelerator) modules, and the VPU cores. This particular tile will be manufactured on the TSMC N6 process.

While it will certainly take time for developers to make full use of the VPUs in Intel processors, the company is nonetheless banking on building an ecosystem of AI applications on PCs. Intel claims it has the market presence and reach to bring AI acceleration to the “mainstream”, and points to the collaborative effort that brought support for its hybrid x86 processors, “Alder Lake” and “Raptor Lake”, to Windows, Linux, and the wider ISV ecosystem.

Accelerating AI in modern operating systems and applications will undoubtedly be a challenge for the industry as a whole. The ability to run AI workloads locally will be of little value if developers find it difficult to build applications, for example because each manufacturer uses its own software stack and hardware to accelerate AI.

There must be a common layer for running AI workloads locally, and here DirectML, the DirectX 12 machine learning acceleration library (an approach supported by Microsoft and AMD), will play an important role.

Intel’s VPU supports DirectML as well as ONNX and OpenVINO, which Intel says will bring higher performance to its processors, although developers will need to target these standards specifically if they want maximum performance.
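To make the idea of a common layer concrete, here is a minimal sketch of how an application might choose among acceleration backends at run time. It assumes the ONNX Runtime Python package, whose execution providers include `OpenVINOExecutionProvider`, `DmlExecutionProvider` (DirectML), and `CPUExecutionProvider`. The preference order and the helper names `available_providers` and `pick_provider` are illustrative assumptions, not anything Intel or Microsoft has published, and the article does not say which provider would map to the VPU.

```python
from __future__ import annotations

from typing import Sequence

# Assumed preference order, for illustration only: try a vendor-specific
# backend first, then DirectML, then the always-present CPU fallback.
PREFERRED_PROVIDERS = (
    "OpenVINOExecutionProvider",
    "DmlExecutionProvider",
    "CPUExecutionProvider",
)


def available_providers() -> list[str]:
    """Return the execution providers this machine's ONNX Runtime build offers.

    Falls back to the CPU provider name when onnxruntime is not installed,
    so the sketch stays runnable without the package.
    """
    try:
        import onnxruntime as ort
    except ImportError:
        return ["CPUExecutionProvider"]
    return list(ort.get_available_providers())


def pick_provider(
    available: Sequence[str],
    preferred: Sequence[str] = PREFERRED_PROVIDERS,
) -> str:
    """Pick the first preferred provider that is actually available."""
    for name in preferred:
        if name in available:
            return name
    raise RuntimeError("no usable execution provider found")
```

With onnxruntime installed, the chosen name could then be passed as `providers=[name]` when creating an `onnxruntime.InferenceSession`, so the same application code runs whether or not a dedicated accelerator is present.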

Many of today’s most demanding AI workloads, including large language models such as ChatGPT, require significant amounts of processing power and will therefore continue to run in large data centers.

However, Intel cites concerns about data privacy and about the latency of getting results back from the cloud, and argues that many AI-related tasks can be performed locally, such as audio, video, and image processing (for example with the Unreal Engine graphics engine).
