In a new scientific study, an English radio astronomer asks whether artificial intelligence could be one reason no other intelligent beings have been found in the universe, and he estimates how long it would take for a civilization to be wiped out by "runaway" artificial intelligence.

The Fermi Paradox sums up the puzzle: in a universe this vast, why haven't other civilizations sent us radio signals? Could it be that once civilizations develop artificial intelligence (AI), most of them face a short road to oblivion? A large-scale, civilization-ending barrier of this kind is called a "Great Filter," and AI is one of the most popular subjects of speculation about what that filter might be. Could the seemingly inevitable development of AI explain the eerie "Great Silence" we hear from the universe?

The Great Filter theory holds that other civilizations, perhaps many, have existed throughout the history of the universe, but all of them disappeared before they had the chance to contact Earth. In 1998, economist Robin Hanson argued that the emergence of life, intelligence, and advanced civilization must be far rarer than the sheer number of stars and planets would suggest.

Michael Garrett is a radio astronomer at the University of Manchester and director of the Jodrell Bank Centre for Astrophysics, and he has been extensively involved in the search for extraterrestrial intelligence (SETI). Although his research interests are eclectic, he is essentially a highly specialized, credentialed version of the people in TV series and movies who listen to the universe for signs of other civilizations. In this peer-reviewed paper, published in the journal of the International Academy of Astronautics, he compares theories about artificial superintelligence with concrete observations from radio astronomy.

Garrett explains in his paper that scientists have grown increasingly concerned as time passes without any sign that other intelligent life exists. "This 'Great Silence' represents something of a paradox when compared to other astronomical findings that suggest the universe is hospitable to the emergence of intelligent life," he writes. He points out that "the concept of the 'Great Filter' is often used: a universal barrier and insurmountable challenge that prevents the widespread emergence of intelligent life."

There are countless potential Great Filter candidates, from climate-driven extinction to a devastating global pandemic. Any number of events could stop a global civilization from becoming multi-planetary. For people who subscribe to Great Filter theories, putting people on Mars or the Moon is a way to reduce that risk. "The longer we remain only on Earth, the more likely it is that a Great Filter event will wipe us out," the thinking goes.

Today's artificial intelligence does not come close to human intelligence. But Garrett writes that AI is already doing tasks that people previously did not believe computers could do. If this trajectory leads to so-called general artificial intelligence (GAI), a key distinction meaning an algorithm that can reason and synthesize ideas in a truly human way, backed by enormous computing power, we could be in real trouble. In the paper, Garrett follows a chain of hypotheticals to one possible outcome, asking how long it would take for a civilization to be wiped out by uncontrolled AI.

Unfortunately, in Garrett's scenario, it takes only 100 to 200 years. He explains that developing AI is a single-minded endeavor, driven and accelerated by data and processing power, in contrast to the messy, multidimensional work of space travel and colonization. "We see this divide today in the influx of researchers into IT fields compared with the scarcity in the life sciences. Every day on Twitter, billionaires loudly proclaim how important it is to colonize Mars, yet we still don't know how humans will survive the journey without being ripped apart by cosmic radiation," he says. "Never mind that man behind the curtain."

Garrett examines a number of specific hypothetical scenarios and relies on sweeping assumptions. He assumes that life exists elsewhere in our galaxy and that AI and GAI are "natural developments" of these civilizations. He also draws on the Drake equation, a speculative way to estimate the number of communicating civilizations in our galaxy, which contains many variables whose values we have no real idea about.
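For readers unfamiliar with it, the Drake equation in its standard form estimates N, the number of civilizations in our galaxy whose signals we might detect, as a product of seven factors. The block below is a generic illustration rather than Garrett's own calculation, and the two lifetimes compared at the end (200 years versus a hypothetical one million years) are assumed values chosen only to show how N scales with L.

```latex
N = R_{*} \cdot f_{p} \cdot n_{e} \cdot f_{l} \cdot f_{i} \cdot f_{c} \cdot L
% R_* : average rate of star formation in the galaxy (stars per year)
% f_p : fraction of those stars that have planets
% n_e : number of planets per such star that could support life
% f_l : fraction of those planets on which life appears
% f_i : fraction of those on which intelligent life emerges
% f_c : fraction of civilizations that release detectable signals
% L   : length of time (years) such civilizations keep releasing signals

% N is linear in L, so with the other six factors held fixed
% (lifetimes below are illustrative assumptions, not values from the paper):
\frac{N(L = 200\,\text{yr})}{N(L = 10^{6}\,\text{yr})} = \frac{200}{10^{6}} = 2 \times 10^{-4}
```

Under these illustrative assumptions, shortening L from a million years to 200 years cuts the expected number of detectable civilizations by a factor of 5,000, whatever fixed values the other, highly uncertain factors take.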

Still, this layered hypothetical argument leads to a firm conclusion: AI needs strict, ongoing regulation. Garrett notes that there is reluctance to place artificial intelligence within a regulatory framework for fear of losing productivity.

In Garrett's model, civilizations have only a few hundred years in the AI age before they vanish from the map. Against the vast distances of space and the immense span of cosmic time, such a short window means almost nothing: the number of civilizations we could expect to detect at any given moment drops to essentially zero, which, he says, is consistent with SETI's current success rate of 0 percent. "Without practical regulation, there is every reason to believe that AI could pose a major threat to the future course of not only our technical civilization but all technical civilizations," Garrett writes.

With information from Popular Mechanics

“Total alcohol fanatic. Coffee junkie. Amateur twitter evangelist. Wannabe zombie enthusiast.”

More Stories

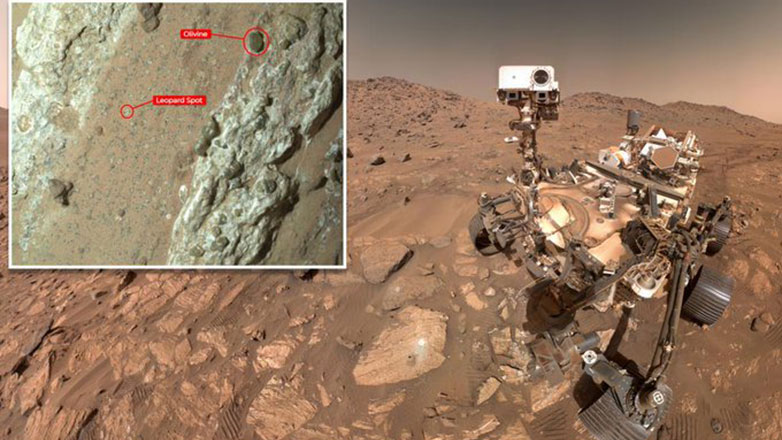

NASA: Signs of ancient life on Mars found in Chiava rock

Motorola Razr 50 Ultra Review – Review

6 Phrases That Show You’re Mentally Stronger Than Most People